Namaste! Last week I had the pleasure of teaching at Data Science Retreat again. After introducing the current batch to the magic of Deep Learning a couple of weeks ago, I came back. AI-Guru returns. And I brought something great with me: Autoencoders, Generative Adversarial Networks and Triplet Loss. Something you might call Next Generation Deep Learning!

Warming up the crowd with Transfer Learning.

Did you know that in some cases you do not need a lot of data for Deep Learning? This was a big idea I explained during class. A warm-up, so to speak. Let us consider the image processing domain for a second. Here it is true that you need a lot of images to train a net from scratch. It is also true that training can take quite some time, even on a gigantic GPU cluster. But there is a principle that lets us train even on a (heavily GPU-underpowered) MacBook Pro or a similar machine. With only a couple of images. Overnight. This principle is called Transfer Learning.

Transfer Learning can be very handy. You just download a pre-trained Neural Network. There are multiple options available on the Internet: MobileNet, Inception, and VGG19, just to name a few. What do you do after downloading? Replace the final classification layers with your own and train the net on your data, usually keeping the pre-trained layers frozen. Why is this so effective, you might ask? Well… The pre-trained network is already very, very good at feature extraction. This is the part of the net that needs quite some training. Quite a lot. Once this is up and running, everything else (classification and regression) is just a piece of cake.
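To make the principle concrete, here is a minimal NumPy sketch that rests on one loud assumption. The "pre-trained" feature extractor is simulated by a fixed random projection plus ReLU, standing in for a real network such as MobileNet. Only a small logistic-regression head is trained, on just 20 labeled samples. The function names are hypothetical. In Keras, this roughly corresponds to setting `trainable = False` on the pre-trained base model and adding a new output layer on top.

```python
import numpy as np

def extract_features(images, frozen_weights):
    """Stand-in for a pre-trained backbone (assumption): a frozen projection + ReLU.

    The weights are never updated; this is the "already trained" part of the net.
    """
    return np.maximum(images @ frozen_weights, 0.0)

def train_head(features, labels, lr=0.05, epochs=500):
    """Train only a logistic-regression head on top of the frozen features."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(epochs):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        error = probs - labels  # gradient of the cross-entropy w.r.t. the logits
        w -= lr * features.T @ error / len(labels)
        b -= lr * error.mean()
    return w, b

def predict(features, w, b):
    return (features @ w + b > 0).astype(int)

# Tiny synthetic "dataset": 10 images per class, each flattened to 64 values.
rng = np.random.default_rng(0)
images = np.vstack([rng.normal(2.0, 1.0, (10, 64)), rng.normal(-2.0, 1.0, (10, 64))])
labels = np.array([0] * 10 + [1] * 10)
frozen_weights = rng.normal(0.0, 1.0 / 8.0, (64, 32))  # pretend these came pre-trained

features = extract_features(images, frozen_weights)
w, b = train_head(features, labels)
accuracy = (predict(features, w, b) == labels).mean()
print(f"training accuracy of the new head: {accuracy:.2f}")
```

The expensive part (`frozen_weights`) is reused as-is. Only 33 numbers (`w` and `b`) are actually learned, which is why a few samples and a laptop can be enough.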

Very advanced stuff. A look at Representation Learning.

After Transfer Learning came Recommender Systems. They are a perfect example of a use case for Representation Learning. Not the only one! There is more to it… But Recommenders definitely show the power of the approach.

Imagine that you have a sample of something you like. Could be a picture, a piece of music, a book. The sky is the limit. How could you find other samples that are similar? Usually your samples are way too big for the naive approach of "computing all distances between your sample and all reference samples" on the raw data. Embeddings help a lot here.

An Embedding is a means to map any sample into something called a latent space. In essence, this process radically reduces the number of values while preserving the semantics. And this is the crucial point. An image that you like might be very large. Millions and millions of numbers representing the per-pixel values of your holiday photos. What if you could represent the semantics of a photo in just 100 or even 10 numbers? Perfectly possible with Representation Learning. Extract feature vectors from your samples and then find neighbours with some distance math-magic. Piece of cake. But how do you get the feature vector in latent space?
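Once every sample has been turned into a short feature vector, finding neighbours takes only a few lines. The sketch below assumes the embeddings already exist, for example produced by a trained Encoder. It does a brute-force search with NumPy, which is cheap because latent vectors are small. `nearest_neighbours` is a hypothetical helper, not part of any library.

```python
import numpy as np

def nearest_neighbours(query, references, k=3, metric="cosine"):
    """Return indices of the k reference embeddings closest to `query`.

    query: shape (d,), references: shape (n, d), both already in latent space.
    """
    if metric == "cosine":
        q = query / np.linalg.norm(query)
        r = references / np.linalg.norm(references, axis=1, keepdims=True)
        distances = 1.0 - r @ q  # cosine distance
    elif metric == "euclidean":
        distances = np.linalg.norm(references - query, axis=1)
    else:
        raise ValueError(f"unknown metric: {metric}")
    return np.argsort(distances)[:k]

# Four reference samples embedded in a 2-dimensional latent space.
references = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
query = np.array([1.0, 0.0])
print(nearest_neighbours(query, references, k=2))                      # [0 2]
print(nearest_neighbours(query, references, k=2, metric="euclidean"))  # [0 2]
```

For millions of reference samples you would typically swap the brute-force search for an approximate nearest-neighbour index. The idea stays the same.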

Autoencoders and Variational Autoencoders.

Autoencoders are the bomb! Especially when they are variational. Autoencoders are Neural Networks with a bottleneck in the middle. In this bottleneck lives the latent space. Do you know what makes them so very awesome? You train them in an unsupervised way! No need for labels: the network simply learns to reconstruct its own input after squeezing it through the bottleneck. Autoencoders are in the domain of Unsupervised Learning. Just use the data you have and do something good with it.
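Here is a deliberately tiny sketch of that idea in plain NumPy. It is a linear Autoencoder: the Encoder squeezes 8 numbers into a 2-dimensional bottleneck, and the Decoder tries to reconstruct the input. Note that the training loop never sees a label, because the input is its own target. Real Autoencoders use non-linear layers and a framework such as Keras. Treat the function name and hyperparameters here as illustrative assumptions.

```python
import numpy as np

def train_linear_autoencoder(x, latent_dim, lr=0.05, epochs=2000, seed=0):
    """Fit encoder/decoder matrices by gradient descent on the reconstruction error.

    Encoder: z = x @ w_enc (the bottleneck). Decoder: x_hat = z @ w_dec.
    """
    rng = np.random.default_rng(seed)
    n_features = x.shape[1]
    w_enc = rng.normal(0.0, 0.1, (n_features, latent_dim))
    w_dec = rng.normal(0.0, 0.1, (latent_dim, n_features))
    losses = []
    for _ in range(epochs):
        z = x @ w_enc        # embed into latent space
        x_hat = z @ w_dec    # reconstruct from latent space
        error = x_hat - x    # no labels: the input is the target
        losses.append(np.mean(error ** 2))
        grad_x_hat = 2.0 * error / error.size  # d(mean squared error)/d(x_hat)
        grad_w_dec = z.T @ grad_x_hat
        grad_w_enc = x.T @ (grad_x_hat @ w_dec.T)
        w_enc -= lr * grad_w_enc
        w_dec -= lr * grad_w_dec
    return w_enc, w_dec, losses

# Unlabeled data: 200 samples with 8 features that secretly depend on only 2 factors.
rng = np.random.default_rng(1)
x = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8)) + 0.05 * rng.normal(size=(200, 8))
w_enc, w_dec, losses = train_linear_autoencoder(x, latent_dim=2)
print(f"reconstruction error: {losses[0]:.3f} -> {losses[-1]:.3f}")
```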

My personal focus is always on Variational Autoencoders. While plain Autoencoders are quite good, their latent space is usually not very exciting. It is often not nicely clustered, and embeddings in latent space usually cannot be interpolated in a meaningful way.

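Variational Autoencoders are better. Like plain Autoencoders, they have two components: an Encoder that embeds your samples into latent space, and a Decoder that takes vectors from latent space and generates samples from them. The difference is that the Encoder of a VAE does not output a single point. It outputs the mean and variance of a distribution in latent space, and training adds a penalty (a KL divergence) that keeps these distributions close to a standard normal distribution. This is what makes the latent space smooth. Yes, you are exactly right, you have a great idea now: the Decoder of a VAE can be used for creating new data. But this is another topic… And the output of the Encoder can be used for distance measurements. How far is picture 1 away from picture 2? Just do the Euclidean or cosine distance math in latent space. Beautiful!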
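The ingredients that set a VAE apart can be sketched in a few lines of NumPy. The reparameterization trick samples a latent vector from the mean and log-variance the Encoder outputs. The KL term pulls those distributions towards a standard normal. A small interpolation helper illustrates walking between two latent vectors. The Encoder and Decoder networks themselves are left out, and `mu` and `log_var` are assumed to come from an Encoder. The squared-error reconstruction term is one common choice among several.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (the reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)) per sample, summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

def vae_loss(x, x_hat, mu, log_var):
    """Reconstruction error plus KL penalty, averaged over the batch."""
    reconstruction = np.sum((x - x_hat) ** 2, axis=-1)
    return np.mean(reconstruction + kl_to_standard_normal(mu, log_var))

def interpolate(z_start, z_end, steps=5):
    """Points on the straight line between two latent vectors (feed them to a Decoder)."""
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - t) * z_start + t * z_end

rng = np.random.default_rng(0)
mu = np.array([[1.0, 0.0]])
log_var = np.zeros((1, 2))
z = reparameterize(mu, log_var, rng)       # one random latent vector around mu
print(kl_to_standard_normal(mu, log_var))  # [0.5]
```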

Generative Adversarial Neural Networks.

GANs! Arguably the most successful Neural Nets when it comes to generating artificial data! The idea is very, very simple. You create a Generator. This fella is a Neural Net that generates samples. For example, pictures of celebrities. And you create a Discriminator. Its only task is to discriminate between fakes and real samples. You take both nets and throw them into a competitive environment. They get trained together, in alternating steps. After a while your Discriminator gets really good at spotting fakes. And if you do it right, your Generator gets even better at creating fakes! Once you have reached this stage, you basically throw away the Discriminator and do something great with the Generator.
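The competition can be written down as two opposing loss functions. The NumPy sketch below assumes a Discriminator that outputs the probability that a sample is real. It uses binary cross-entropy, with the common "non-saturating" variant for the Generator. The networks and the alternating update steps are only described in comments.

```python
import numpy as np

def binary_cross_entropy(probs, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and a 0/1 target."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return -np.mean(target * np.log(probs) + (1.0 - target) * np.log(1.0 - probs))

def discriminator_loss(d_real, d_fake):
    """The Discriminator wants real samples scored 1 and fakes scored 0."""
    return binary_cross_entropy(d_real, 1.0) + binary_cross_entropy(d_fake, 0.0)

def generator_loss(d_fake):
    """The Generator wants its fakes to be scored as real (non-saturating loss)."""
    return binary_cross_entropy(d_fake, 1.0)

# One training round alternates between both players:
#   1. d_real = D(real_batch), d_fake = D(G(noise)); update D to lower discriminator_loss.
#   2. d_fake = D(G(noise)); update G to lower generator_loss.

# A clueless Discriminator that always answers 0.5 scores 2 * log(2) in total.
print(round(discriminator_loss(np.array([0.5]), np.array([0.5])), 3))  # 1.386
```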

Meet your final boss: Triplet Loss.

In Deep Learning there is a Bowser to your Mario. It is called Triplet Loss (TL). The idea is very simple. You come up with a base network. Its input is a sample, and its output is a vector in some latent space. How many dimensions this latent space has is a hyperparameter. Then you connect three instances of your base model, with shared weights. This is the crucial part: all three instances are really one and the same network.
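The crucial "shared weights" part simply means that one and the same network embeds the anchor, the positive and the negative. In the NumPy sketch below, the hypothetical `BaseNetwork` is a single linear layer followed by L2 normalization. That is an assumption; a real base network would be a deep CNN or similar. The three "instances" are just three calls on the same object.

```python
import numpy as np

class BaseNetwork:
    """Hypothetical base network: one linear layer + L2 normalization."""

    def __init__(self, input_dim, latent_dim, seed=0):
        rng = np.random.default_rng(seed)
        # latent_dim is the hyperparameter that sets the size of the latent space
        self.weights = rng.normal(0.0, 0.1, (input_dim, latent_dim))

    def __call__(self, x):
        z = x @ self.weights
        return z / np.linalg.norm(z, axis=-1, keepdims=True)

def embed_triplet(base, anchor, positive, negative):
    """Three 'instances' of the base network: the very same weights embed all inputs."""
    return base(anchor), base(positive), base(negative)

base = BaseNetwork(input_dim=64, latent_dim=16)
rng = np.random.default_rng(1)
anchor, positive, negative = (rng.normal(size=(4, 64)) for _ in range(3))
emb_a, emb_p, emb_n = embed_triplet(base, anchor, positive, negative)
print(emb_a.shape)  # (4, 16)
```

Because there is only one set of weights, every update driven by the loss changes the embedding for all three roles at once.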

How do you train TL? You need a sampling strategy. This can be a fairly complex algorithm that selects good samples for training. You basically train on triplets: anchor, positive and negative. Imagine you want to create a Neural Network that robustly identifies all your colleagues by their faces. You randomly select an anchor, say a picture of your buddy Pascal. Then you select another picture of Pascal. This is the positive. And finally you select a picture of Deniel. This is your negative.
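The simplest possible sampling strategy, purely random triplets, could look like the sketch below. It assumes labels are given as one identity per photo. The function name and the example names are illustrative. Practical setups often go further and prefer "hard" triplets whose negative lies close to the anchor, which is one source of the complexity.

```python
import random
from collections import defaultdict

def make_triplet_sampler(labels, seed=0):
    """Return a function that draws random (anchor, positive, negative) index triplets."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    # Only identities with at least two photos can provide an anchor and a positive.
    anchor_labels = [label for label, idx in by_label.items() if len(idx) >= 2]
    all_labels = list(by_label)

    def sample():
        anchor_label = rng.choice(anchor_labels)
        anchor, positive = rng.sample(by_label[anchor_label], 2)
        negative_label = rng.choice([other for other in all_labels if other != anchor_label])
        negative = rng.choice(by_label[negative_label])
        return anchor, positive, negative

    return sample

labels = ["Pascal", "Pascal", "Pascal", "Deniel", "Deniel", "Anna"]
sample = make_triplet_sampler(labels)
a, p, n = sample()
print(labels[a], labels[p], labels[n])  # anchor and positive share a name, the negative does not
```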

What does training mean once you are capable of creating anchor-positive-negative triplets? You make sure that the latent distance between the two Pascal photos becomes small, while the latent distance between Pascal and Deniel becomes larger, by at least a fixed margin. You get that? Right, it basically creates clusters for your classes while pushing other classes away. This is a fine piece of algorithm.
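In code, the loss is commonly written as a hinge on squared distances. It drops to zero once the negative is at least a margin further from the anchor than the positive. Here is a minimal NumPy sketch, assuming the three embedding batches come from the same shared-weight base network. The default margin of 0.2 is an illustrative choice, not a value taken from NGDLM.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean of max(d(a, p) - d(a, n) + margin, 0) over the batch (squared Euclidean d)."""
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)  # pull the positive closer
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)  # push the negative away
    return np.mean(np.maximum(d_pos - d_neg + margin, 0.0))

anchor = np.array([[0.0, 0.0]])
positive = np.array([[0.0, 0.1]])
print(triplet_loss(anchor, positive, np.array([[1.0, 0.0]])))  # 0.0: negative is far enough away
print(triplet_loss(anchor, positive, np.array([[0.1, 0.0]])))  # 0.2: negative is as close as the positive
```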

Triplet Loss is the King Koopa of Deep Learning. It is the master embedder. And I tell you… It is quite an endeavour to implement. I did it. Three times. All blood, sweat and tears. And the final time, I moved TL into my Deep Learning library NGDLM. All for the sake of reusability. Why? I want YOU to apply TL with fewer than 30 lines of code, not more than 350…

NGDLM stands for Next Generation Deep Learning Models.

I really love sharing as much as possible with you. Especially everything that is practical in nature. This is why I came up with NGDLM, short for "Next Generation Deep Learning Models". It is a tiny library that helps you create and train Autoencoders, Generative Adversarial Nets, Triplet Loss and others with as little code as possible. It is built on top of Keras.

NGDLM is all about Deep Neural Networks that go beyond simple Feed-Forward Networks. On my learning pilgrimage I found out that building those complex nets is comparable to building pyramids. Trust me when I say that. I have built pyramids in code quite a few times. If you like, have a look at NGDLM on GitHub.

Deep Reinforcement Learning might be next…

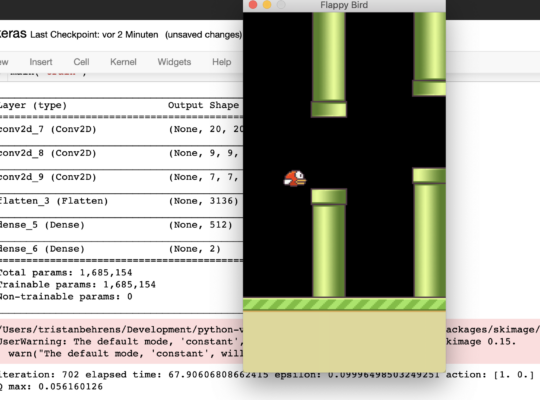

Whoa. Again I had a lot of fun in Berlin. The students at Data Science Retreat are always very eager to learn more. Learning more is the reason why I came back. I usually do not teach more than one block. This time I did and it was awesome. Thanks everybody! This leaves me with the final question: What might be next? Let me reveal a secret: I am currently studying Deep Reinforcement Learning.

Stay in touch.

I hope you liked the article. Why not stay in touch? You will find me on LinkedIn, XING and Facebook. Please add me if you like and feel free to like, comment and share my humble contributions to the world of AI. Thank you!

If you want to become part of my mission of spreading Artificial Intelligence globally, feel free to become one of my Patrons.

A quick word about me. I am a computer scientist with a love for art, music and yoga. I am an Artificial Intelligence expert with a focus on Deep Learning. As a freelancer I offer training, mentoring and prototyping. If you are interested in working with me, let me know. My email address is tristan@ai-guru.de. I am looking forward to talking to you!