Namaste! I am very excited about this article. I did some experiments at the intersection of Deep Learning and Psychology, applying Deep Learning-enabled Natural Language Processing to psychological character typing. Yes, I could now tell your character from what you write using a Deep Neural Network! I would 😉

First and foremost, I am very sorry. I have been quite busy over the last couple of months and did not have much time for writing articles. This is another reason why I am so excited. Writing is a pleasure for me.

The Deep Learning and Psychology long story short.

Let me be a little anticlimactic. Darth Vader is Luke Skywalker’s father. And if you combine the Myers-Briggs Type Indicator (MBTI) with data from reddit, glue it together with fast.ai, and add some heavy BERT you will end up with an 85% validation-accuracy on introvert-extrovert classification.

„Why would I do such a thing?“, you might ask. And rightfully so. In Natural Language Processing, you often run into an application called Sentiment Analysis. You can think of it as getting the current mood out of a text. For example, given an article, is it a positive one or a negative one? Or given an email, is the sender happy, neutral, sad, or even angry? The area of potential use cases here is limitless.

„How would I do such a thing?“. Again a rightful question. A sketched answer would be as follows. Get a labeled dataset. Create a Neural Network. Train the Neural Network on the data. If the Neural Network has a good accuracy, deploy it to production. Most of this we are going to touch today. But first…

Acknowledgements.

All this would not have been possible without the work of others. All human endeavors are collective ones. Works of different people all over the world get improved, extended and combined in many ways. And this is especially true for AI.

Vladimir Dyagilev in his article Using Deep Learning to Classify a Reddit User by their Myers-Briggs Personality Type laid the groundwork for this publication. As did Keita Kurita in his article Paper Dissected: “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” Explained. Vladimir showed how to use data from reddit to create a Myers-Briggs-classifier. And Keita showed how easy it is to use BERT in fastai.

Did you know...

That you can book me for trainings? That I could be your mentor?

Feel free to get in touch anytime.

Finding the dots to connect…

This article deals with multiple topics. Let us consider each one individually.

About psychological character typing.

Psychological typology deals with personality types. That boils down to being able to classify different types of characters. Of course, we can safely assume that each individual is unique. But each individual also shares traits with others. And the amount of sharing allows us to type people. Applications include self-development, team-building, counseling, therapy, marketing, and career-building. This list is definitely not exhaustive. Typing can certainly be misused, but the positive use cases seem to outnumber the negative ones.

The Myers-Briggs Type Indicator comes with four categories. Namely, Introversion/Extraversion, Sensing/Intuition, Thinking/Feeling, Judging/Perception. It is also assumed that for each individual, in each of the four categories, one component is dominant. This gives you a total of 16 different types. One example would be introversion, intuition, thinking, and judging. INTJ for short, which is exactly my personality type. Now that you know me a little better, or at least have some new inspiration for searching something on the internet, it is time to move to fastai. Which is something that amazes me a lot these days.
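As a small aside before moving on, the arithmetic behind the 16 types can be sketched in a few lines of Python. This is only an illustration: the names `DICHOTOMIES`, `ALL_TYPES`, and `describe` are made up for this sketch, and it assumes the common letter convention in which Intuition is abbreviated as N.

```python
from itertools import product

# The four MBTI categories as letter pairs.
# Assumption: Intuition is abbreviated "N", as is common for MBTI codes.
DICHOTOMIES = [("I", "E"), ("S", "N"), ("T", "F"), ("J", "P")]

LETTER_NAMES = {
    "I": "Introversion", "E": "Extraversion",
    "S": "Sensing", "N": "Intuition",
    "T": "Thinking", "F": "Feeling",
    "J": "Judging", "P": "Perception",
}

# Picking one letter per category gives 2 * 2 * 2 * 2 = 16 type codes.
ALL_TYPES = ["".join(letters) for letters in product(*DICHOTOMIES)]


def describe(type_code):
    """Spell out the dominant component of each category for a type code."""
    code = type_code.strip().upper()
    if code not in ALL_TYPES:
        raise ValueError(f"not a valid MBTI type code: {type_code!r}")
    return [LETTER_NAMES[letter] for letter in code]


print(len(ALL_TYPES))    # 16
print(describe("INTJ"))
```

The first letter of each code is the one that matters for the introvert-extrovert classification discussed in this article.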

About fast.ai.

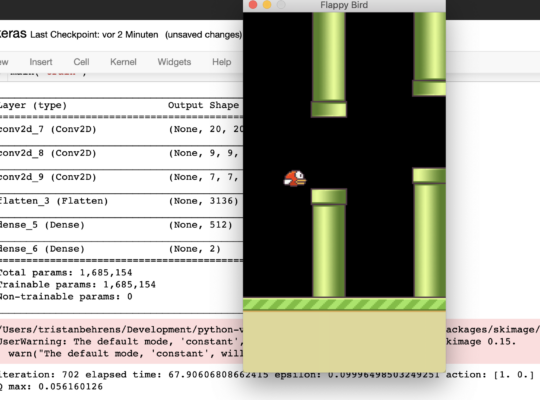

fast.ai is on a quest to make Neural Networks uncool again. That is what the team behind it says on their homepage. One component of their crusade is fastai. No dot. Their programming library. What I really liked while experimenting is the high-level building-block approach. You just connect a couple of pieces dedicated to purposes such as data processing, training, evaluation, and visualization. This keeps your whole programming effort quite slim.

Let us just consider the MNIST example, which I happily copy from their tutorial, because it is very expressive and very elegant. MNIST is the „Hello, world!“ of machine learning. It is about recognizing handwritten digits. Here is the fastai implementation:

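```python
from fastai.vision import *  # fastai v1 star import, as used in the fastai tutorials

path = untar_data(URLs.MNIST_SAMPLE)     # download and extract the sample dataset
data = ImageDataBunch.from_folder(path)  # make the images accessible for training
learn = cnn_learner(data, models.resnet18, metrics=accuracy)  # CNN with resnet18 architecture
learn.fit(1)                             # train for one epoch
```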

That is all! These four lines of code download the data, make it accessible, instantiate a Convolutional Neural Network with the resnet18 architecture, and train it. Four lines of code. And I thought Keras was code-efficient just a short while ago…

What I strongly believe makes a lot of sense are the OOP patterns behind fastai. You immediately feel that those best practices really make a difference. But they also force you to think in landscapes of interacting objects rather than in procedural top-to-bottom code. But I guess many people learn that quite quickly.

About BERT.

BERT made quite some impact at the end of 2018. BERT is short for Bidirectional Encoder Representations from Transformers. You can think of it as word embeddings on steroids. Word embeddings are those comparatively tiny mappings from words to word vectors. They are very powerful nonetheless. They allow you to do math on words and texts. Finding closest words, finding opposites, computing a numerical representation of the semantic content of a text… But word embeddings are also quite limited in what you can do with them. BERT allows for contextual word embeddings. This means that each word is not mapped to a single vector. Rather, words can be mapped to vectors that also contain knowledge about their context. You know that depending on which words are next to a single word, the meaning of that word might and usually will differ.
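To make the "math on words" idea a bit more tangible, here is a minimal sketch with classic, non-contextual word vectors. Everything in it is an assumption for illustration: the three-dimensional vectors in `TOY_VECTORS` are hand-made and not taken from any trained model, and `cosine_similarity` and `closest_word` are hypothetical helpers written for this example. Real embeddings have hundreds of dimensions and are learned from text.

```python
import math

# Hand-made toy vectors, NOT from a trained model.
# The dimensions loosely stand for (royalty, male, female).
TOY_VECTORS = {
    "king": (1.0, 1.0, 0.0),
    "queen": (1.0, 0.0, 1.0),
    "man": (0.0, 1.0, 0.0),
    "woman": (0.0, 0.0, 1.0),
    "apple": (0.0, 0.1, 0.1),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_word(vector, exclude=()):
    """Return the vocabulary word whose vector is most similar to `vector`."""
    candidates = [word for word in TOY_VECTORS if word not in exclude]
    return max(candidates, key=lambda w: cosine_similarity(vector, TOY_VECTORS[w]))


# The well-known analogy: king - man + woman lands next to queen.
target = tuple(
    k - m + w
    for k, m, w in zip(TOY_VECTORS["king"], TOY_VECTORS["man"], TOY_VECTORS["woman"])
)
analogy = closest_word(target, exclude=("king", "man", "woman"))
print(analogy)  # queen
```

Note that every word has exactly one vector here, no matter which sentence it appears in. That is precisely the limitation that contextual embeddings address.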

Generally, you would use BERT in a transfer learning scenario to improve the accuracy of your Neural Network. This, of course, cuts down the required number of samples to train on in your specific use case. That is the whole deal of using pre-trained models. And it is really easy to use BERT in fastai. There is a package available called pytorch_pretrained_bert. Just a little code:

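```python
from pytorch_pretrained_bert.modeling import BertForSequenceClassification

# Load the pre-trained BERT weights with a classification head on top;
# num_labels is the number of target classes.
model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=6)
```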

Voila! This gives you a model that you can train. On your data. Using BERT embeddings that are context-sensitive. Now all that remains is some data processing effort.

Putting it all together.

In my GitHub repository you will find a notebook that allows you to train a BERT-based classifier on MBTI data. I focused on a simple classifier: finding out whether the author of a text is an introvert or an extrovert. In MBTI this means focusing on the first letter of the typing system, I or E. Fairly easy. It took only a little preprocessing to feed the data into a model for training. And the results were very promising. Look at the losses and validation accuracy:

The losses tell you that after just a couple of epochs the model starts overfitting. I guess this has to do with the size of the dataset. It is rather small. If we check the validation accuracy, we see that it ends up at around 85%. This means that out of 100 samples, 85 will be correctly classified by the Deep Neural Network as introvert or extrovert. A look at the confusion matrix is also revealing:

Ideally, you would have a dark diagonal. Yes, I guess more data would help.
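For readers who want to see how such numbers come about, here is a minimal sketch of the bookkeeping involved: deriving the binary label from the first letter of the type code, and computing accuracy and a confusion matrix. It is not the code from my notebook. The helper names are made up for this illustration, and the six samples are invented toy data, not results from the experiment.

```python
from collections import Counter

LABELS = ["introvert", "extrovert"]


def to_binary_label(mbti_type):
    """Map a four-letter MBTI code to a binary label via its first letter."""
    first = mbti_type.strip().upper()[:1]
    if first == "I":
        return "introvert"
    if first == "E":
        return "extrovert"
    raise ValueError(f"unexpected MBTI type code: {mbti_type!r}")


def accuracy(y_true, y_pred):
    """Fraction of samples where the prediction matches the true label."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true labels, columns are predicted labels."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


# Invented toy data, purely for illustration.
true_types = ["INTJ", "ENFP", "ISTP", "ESTJ", "INFP", "ENTP"]
y_true = [to_binary_label(t) for t in true_types]
y_pred = ["introvert", "extrovert", "extrovert",
          "extrovert", "introvert", "introvert"]

acc = accuracy(y_true, y_pred)
matrix = confusion_matrix(y_true, y_pred, LABELS)

print(f"accuracy: {acc:.2f}")
for label, row in zip(LABELS, matrix):
    print(label, row)
```

The correct predictions sit on the diagonal of the matrix, which is why a dark diagonal in the plot is what you hope for.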

Thank you very much for reading!

Stay in touch.

I hope you liked the article. Why not stay in touch? You will find me on LinkedIn, XING and Facebook. Please add me if you like and feel free to like, comment on and share my humble contributions to the world of AI. Thank you!

If you want to become a part of my mission of spreading Artificial Intelligence globally, feel free to become one of my Patrons.

A quick word about me. I am a computer scientist with a love for art, music and yoga. I am an Artificial Intelligence expert with a focus on Deep Learning. As a freelancer I offer training, mentoring and prototyping. If you are interested in working with me, let me know. My email address is tristan@ai-guru.de - I am looking forward to talking to you!