Namaste!

The last couple of weeks have been a joyride for me. A joyride of learning new things. Quite a while ago I opened a promising door when I decided to start to learn as much as I can about Deep Reinforcement Learning. My initial motivation was pure curiosity. What is it all about? Today I see use-cases galore and I am looking forward to teaching and to doing projects. I have just published a vlog about my recent findings. The message is: Deep Reinforcement Learning can definitely be applied to Autonomous Driving. And you can get results quickly.

I posted the video on YouTube. Have a look and feel free to like it and subscribe to my channel.

Did you know...

That you can book me for training? That I could be your mentor?

Feel free to get in touch anytime.

What is behind Deep Reinforcement Learning?

Deep Reinforcement Learning is a fascinating field. It is not data-driven in the way supervised Deep Learning is. In Deep Learning a good data-set is always a requirement. Deep Reinforcement Learning, in contrast, is goal-driven. This translates to: In Deep Reinforcement Learning you do not train an intelligent agent with a prepared data-set. Instead you teach it good behaviour by providing it with sensory information and objectives, and it gathers its own experience by acting.

Such objectives are expressed as rewards. Rewards usually come from the environment when an agent performs an action. The environment is the situation the agent is in. The agent can perceive the environment with sensors. And it can act in it with actuators. Positive rewards reinforce good behaviours. And negative rewards discourage bad behaviours. I guess you remember this from your childhood.
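To make the loop of sensing, acting and getting rewarded a bit more tangible, here is a minimal sketch in plain Python. Everything in it is made up for illustration: `CorridorEnv`, `run_episode` and the two policies are hypothetical names, and the reward values are arbitrary. It only shows the shape of the interaction, not any particular library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: walk along a corridor until the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Start every episode at the left end of the corridor.
        self.position = 0
        return self.position  # the observation, i.e. what the "sensor" reports

    def step(self, action):
        # The "actuator": action 1 moves right, any other action moves left.
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        # Positive reward for reaching the goal, small penalty for every other step.
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Let a policy act in the environment and add up the rewards it collects."""
    observation = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(observation)
        observation, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(observation):
    # An agent that has learned nothing yet: it acts at random.
    return random.choice([0, 1])


def always_right(observation):
    # The behaviour we would like the agent to discover.
    return 1


if __name__ == "__main__":
    random.seed(0)
    env = CorridorEnv()
    print("random policy:", run_episode(env, random_policy))
    print("always right:", run_episode(env, always_right))
```

The policy that always walks right collects the highest total reward this toy allows. Learning means finding such a policy from the rewards alone.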

The good things about Deep Reinforcement Learning.

I am really amazed by one fact: Today it is very, very easy to do experiments with Deep Reinforcement Learning.

You see, the first thing you need is an environment. Something in which your agent will learn intelligent behaviours. Fortunately, there is a huge collection of such environments available on the internet. And it is growing as we speak. For starters, I advise you to have a look at OpenAI Gym. It features a number of environments that will keep you busy for quite some time. And the good thing is: Gym has established itself as a de facto standard for agent-environment interfaces. This makes things very, very easy.

The second thing that you need is an agent. Your agent is an entity that has a Deep Neural Network at its core. In recent years Neural Networks have proved to be excellent predictors for actions that maximise the accumulated future rewards, especially in environments that have a huge state-space. Fortunately, such agents are readily available. Keras-RL is a framework that is based on Keras. It implements seven Deep Reinforcement Learning Agents. And the good thing is: It works with OpenAI Gym environments out of the box.
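"Accumulated future rewards" is usually made precise as the discounted return: rewards that arrive later count a little less than rewards that arrive now. The sketch below assumes a discount factor `gamma`, which is not mentioned above and is simply the conventional name. The function name `discounted_return` is likewise just chosen for illustration.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward k steps ahead is weighted by gamma ** k.

    rewards: the rewards collected from the current step onwards, in order.
    gamma:   assumed discount factor between 0 and 1.
    """
    total = 0.0
    # Walk backwards so that each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


if __name__ == "__main__":
    print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
```

An agent built around a value-based method such as DQN tries to predict this quantity for every possible action and then picks the action with the highest prediction.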

What does this mean? Just find a nice Gym-compatible environment, connect it to a Keras-RL agent, tweak some hyperparameters and train your AI. Chances are good that you will be successful. It has never been so easy before.

My Autonomous Driving exercise.

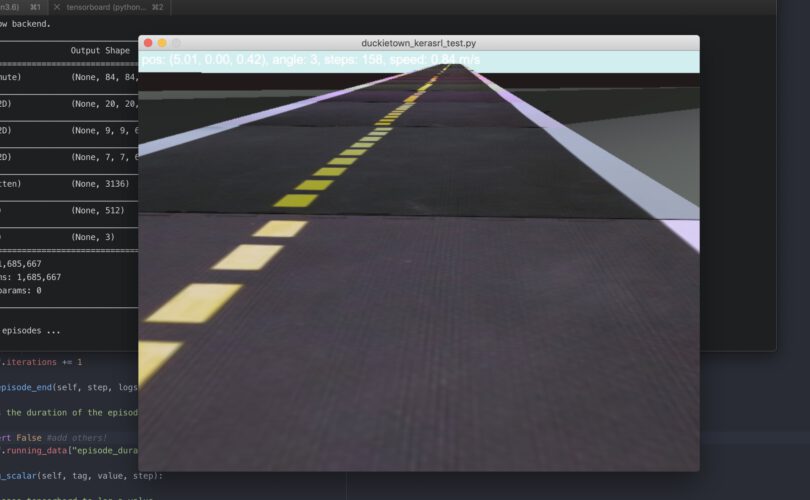

Connecting the technologies. That is exactly what I did. I discovered a Gym-compatible environment based on the Duckietown project. The installation was very easy. It was up and running in no time. Duckietown is both a real-life and a simulated environment. It comes in the shape of a miniature town. An excellent development platform and testbed for autonomous driving, don’t you think? And it provides all the great challenges such as lane following, respecting traffic lights and not running into obstacles.

I connected Keras-RL’s DQN-agent with the Duckietown simulator. Being a huge fan of eating whole elephants (metaphorically) byte by byte, I decided to do simple lane following first. In most cases, it is a good thing to split a big problem into smaller sub-problems and focus on solving those little ones first. Divide and conquer!
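To give a feeling for what "learning lane following from rewards" means, here is a deliberately tiny stand-in. It is not my Duckietown setup and it is not DQN: it uses tabular Q-learning (a table instead of a neural network) on a made-up environment in which the only observation is the car's sideways offset from the lane centre. `LaneEnv`, the reward values and all hyperparameters are assumptions chosen for illustration.

```python
import random

ACTIONS = [0, 1, 2]  # 0 = steer left, 1 = keep straight, 2 = steer right


class LaneEnv:
    """Hypothetical toy: lateral offset from the lane centre, in cells -2..2."""

    OFFSETS = [-2, -1, 0, 1, 2]

    def __init__(self, rng):
        self.rng = rng
        self.offset = 0

    def reset(self):
        # Start each episode at a random position inside the lane.
        self.offset = self.rng.choice(self.OFFSETS)
        return self.offset

    def step(self, action):
        self.offset += action - 1  # -1, 0 or +1 cell
        off_road = abs(self.offset) > 2
        # Best reward in the centre, a large penalty for leaving the road.
        reward = -10.0 if off_road else 1.0 - abs(self.offset)
        self.offset = max(-2, min(2, self.offset))
        return self.offset, reward, off_road


def train(episodes=2000, max_steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    env = LaneEnv(rng)
    q = {(s, a): 0.0 for s in LaneEnv.OFFSETS for a in ACTIONS}
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward, done = env.step(action)
            # No future value after leaving the road, otherwise the best next estimate.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """For each offset, the action with the highest learned value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in LaneEnv.OFFSETS}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

On this toy the learned policy should steer back towards the centre from either side and keep straight once it is there. A DQN agent follows the same idea, but replaces the table with a neural network so that it can cope with camera images instead of five discrete offsets.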

Training took a couple of hours on my MacBook Pro. Yes, no GPU. And it worked like a charm.

A summary.

I really love the building-blocks approach to AI. So many excellent frameworks are readily available. All you have to do is select the right ones and connect them properly, and you will be doing great AI in no time. For example, lane following in Autonomous Driving. Be brave. Do not hesitate. Try things out. And speak about it. This will be very rewarding!

Stay in touch.

I hope you liked the article. Why not stay in touch? You will find me on LinkedIn, XING and Facebook. Please add me if you like and feel free to like, comment and share my humble contributions to the world of AI. Thank you!

If you want to become a part of my mission of spreading Artificial Intelligence globally, feel free to become one of my Patrons.

A quick about me. I am a computer scientist with a love for art, music and yoga. I am an Artificial Intelligence expert with a focus on Deep Learning. As a freelancer I offer training, mentoring and prototyping. If you are interested in working with me, let me know. My email address is tristan@ai-guru.de - I am looking forward to talking to you!